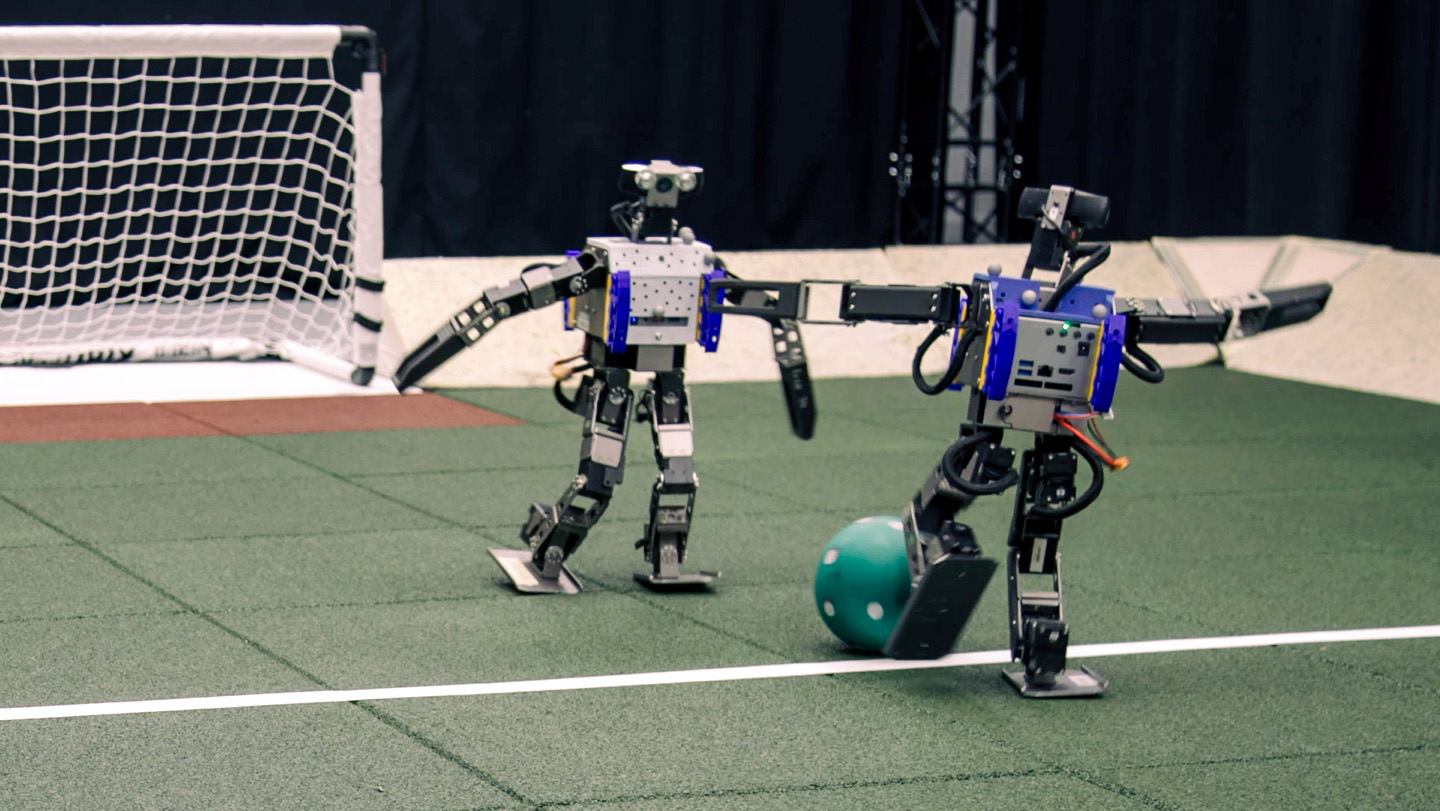

Soccer-playing robots demonstrate agility of AI-driven machines

Carlos Changemaker

ChatGPT and other artificial intelligence tools are already reshaping our online experiences. Now, AI is poised to extend into the physical world.

Using a specific type of AI, humanoid robots could perceive and respond to their surroundings better than earlier robots. Such agile robots could prove valuable in factories, space exploration and other settings.

The key is a type of AI called reinforcement learning. Two recent studies in Science Robotics highlight how this approach could give robots human-like dexterity.

“Significant advancements have been witnessed in AI applications in digital environments, such as with tools like GPT,” notes computer scientist Ilija Radosavovic from the University of California, Berkeley. However, Radosavovic suggests that the potential for AI in physical environments could far exceed current achievements.

Traditionally, software controlling bipedal robots has relied on model-based predictive control. With this method, robots follow pre-set instructions for how to move. One example is the Atlas robot from Boston Dynamics, known for its parkour skills. However, such robots require intricate programming and struggle to adapt to new situations.

Reinforcement learning offers a more promising approach. It lets AI models learn on their own through trial and error, rather than relying on predetermined instructions.

“Our goal was to explore the boundaries of reinforcement learning in practical robot applications,” explains computer scientist Tuomas Haarnoja from Google DeepMind in London. In one of their recent studies, Haarnoja’s team successfully employed an AI system to control a 20-inch-tall toy robot named OP3, teaching it not just to walk but also to engage in one-on-one soccer matches.

Soccer bots

Researcher Guy Lever from Google DeepMind in London describes soccer as an ideal environment to study general reinforcement learning due to its demands for planning, agility, exploration, and competition.

The robots’ small size let the team run many experiments and learn from mistakes, which is harder with larger robots that are more complex to handle. Before being tested on physical robots, the AI software was trained on virtual ones.

The training took place in two stages. In the first stage, one AI model used reinforcement learning to learn how to get a virtual robot up off the ground, while a second model learned how to score goals without tipping over.

The AI models processed information on joint positions and object movements in the virtual world, enabling them to direct the virtual robot’s actions. Reward mechanisms reinforced successful behaviors, hastening learning.
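
The idea that rewards reinforce successful behavior can be illustrated with a toy example. The sketch below is not the researchers' software. It assumes a far simpler problem (an agent in a six-cell corridor that is rewarded only when it reaches the last cell) and uses tabular Q-learning, a classic reinforcement learning method chosen here for illustration. All names and parameter values are hypothetical.

```python
import random

def train_corridor_agent(n_states=6, episodes=500, alpha=0.5,
                         gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor: start in cell 0, reward in the last cell."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 steps left, action 1 steps right
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action] = estimated value
    for _ in range(episodes):
        state = 0
        while state != n_states - 1:
            # Trial and error: explore at random sometimes (or when undecided),
            # otherwise take the action that currently looks best.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + moves[action], 0), n_states - 1)
            reward = 1.0 if next_state == n_states - 1 else 0.0
            # The reward (plus the discounted value of where the agent landed)
            # nudges the estimate for the action that was just tried.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

def greedy_policy(q):
    """Best-known action in each non-terminal cell."""
    return ["left" if left > right else "right" for left, right in q[:-1]]

policy = greedy_policy(train_corridor_agent())
```

After training, `policy` lists the preferred move in each cell. No one told the agent to head right; the preference emerges because only moves that lead toward the reward gain value.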

In the subsequent phase, a third AI model was trained to replicate the actions of the first two models and learn effective strategies against its own clones.

To prepare the AI to control real-world robots, the researchers varied conditions in the virtual world, such as how much friction there was and how long sensory inputs were delayed. The AI was also rewarded for things other than scoring goals, such as moving in ways that put less stress on the knees.
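
Both ideas in this step can be sketched in a few lines. The example below is a rough illustration under stated assumptions, not the team's code: the value ranges, the squared-torque penalty and every name in it are hypothetical. It draws new simulator conditions for each training episode and combines a goal reward with a small cost for knee load.

```python
import random
from dataclasses import dataclass

@dataclass
class SimConditions:
    friction: float         # ground friction coefficient (hypothetical range)
    sensor_delay_ms: float  # delay before sensor readings reach the controller

def sample_conditions(rng, friction_range=(0.4, 1.2), delay_range_ms=(0.0, 40.0)):
    """Draw a fresh set of simulator conditions for one training episode."""
    return SimConditions(
        friction=rng.uniform(*friction_range),
        sensor_delay_ms=rng.uniform(*delay_range_ms),
    )

def shaped_reward(scored_goal, knee_torque, knee_penalty=0.01):
    """Reward a goal, then subtract a cost that grows with knee load."""
    goal_reward = 1.0 if scored_goal else 0.0
    return goal_reward - knee_penalty * knee_torque ** 2

rng = random.Random(0)
# Each episode sees slightly different physics, so a controller cannot
# rely on one exact friction value or one exact sensor timing.
episodes = [sample_conditions(rng) for _ in range(3)]
```

Because the conditions change from episode to episode, a controller that does well across all of them is less likely to be thrown off by the imperfect match between simulation and a real robot.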

After this training, the AI was ready to control real robots. Compared with robots guided by traditional instruction-based software, the AI-controlled robots walked nearly twice as fast, turned three times as quickly and took less time to stand back up.

The robots also showed more advanced motor skills, such as smoothly stringing together sequences of actions.

Radosavovic expresses satisfaction: “The AI software not only mastered individual soccer maneuvers but also grasped the strategic elements crucial for gameplay, like intercepting an opponent’s shot.”

Roboticist Joonho Lee from ETH Zurich applauds the breakthrough, noting the remarkable resilience displayed by the humanoid soccer players.

Transition to Full Scale

In the second study, Radosavovic and colleagues trained an AI system to control Digit, a 1.5-meter-tall humanoid robot with ostrich-like knees that bend backward.

Like Google DeepMind’s team, this group used reinforcement learning to train its AI. However, Radosavovic’s team used a transformer, the type of AI model that underlies large language models such as ChatGPT.

Large language models use the text that came before to predict what comes next. Similarly, the AI controlling Digit looked at past actions to predict the next one, drawing on the robot’s sensory and motor readings from the previous 16 time frames, or about one-third of a second.
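
A fixed-length history like this can be pictured as a sliding window. The sketch below shows only that bookkeeping, not the transformer itself, and the class and field names are hypothetical. Taken at face value, 16 frames spanning about one-third of a second would imply roughly 48 frames per second.

```python
from collections import deque

HISTORY_LEN = 16  # number of past time frames, as stated in the article

class HistoryBuffer:
    """Keeps only the most recent (observation, action) pairs."""

    def __init__(self, maxlen=HISTORY_LEN):
        # A deque with maxlen silently drops the oldest entry when full.
        self.frames = deque(maxlen=maxlen)

    def push(self, observation, action):
        self.frames.append((tuple(observation), tuple(action)))

    def context(self):
        """Return the window, oldest first, as a model would receive it."""
        return list(self.frames)

buffer = HistoryBuffer()
for step in range(40):
    # Placeholder readings; a real robot would supply sensor and motor values.
    buffer.push(observation=[float(step)], action=[0.0])

window = buffer.context()  # holds steps 24 through 39 only
```

Anything older than the window is simply forgotten, so each prediction rests on the same short stretch of recent experience.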

As in the soccer study, the AI model first learned to control virtual robots, which walked over terrain that included slopes and cables they could trip over.

After this virtual training, the AI steered a physical robot through outdoor trials without it falling over. Back in the lab, the robot stayed upright when hit with an inflatable ball.

Overall, the AI system controlled the robot well. Digit walked across planks on the ground and got over obstacles such as stairs, even though the AI had not been trained on them.

Advancing Forward

In recent years, reinforcement learning has become a popular way to control four-legged robots. These latest studies show that the same approach works for two-legged robots, which performed as well as or better than robots controlled by instruction-based software, says computer scientist Pulkit Agrawal from MIT.

Future AI-driven robots may need both the nimbleness of Google DeepMind’s soccer players and the ability to handle varied terrain that Digit showed. Lever says both capabilities matter, noting that they have long been considered grand challenges for robotics and AI.