Artificial intelligence poses challenges for distinguishing truth from fiction

Jennifer Livingstone

Taylor Swift, the multi-award-winning music icon, has garnered numerous accolades and world records. However, in January, she found herself at the center of a disturbing situation beyond her control—online abuse.

Artificial intelligence (AI) was employed to generate fabricated nude images of Swift, which inundated social media platforms. Despite her fans’ swift response with calls to #ProtectTaylorSwift, the fake images persisted.

This incident is just one instance of the widespread dissemination of fake media, including audio and visuals, easily created by non-experts using AI. Celebrities like Swift aren’t the sole targets of such reprehensible attacks. For instance, male peers circulated fake sexual images of female classmates at a high school in New Jersey last year.

Deepfakes are AI-generated fake pictures, audio clips, or videos that pose as authentic content, and they have been used to put false statements in politicians' mouths. In a recent case, robocalls spread a deepfake recording of President Joe Biden urging people not to vote in a primary election in New Hampshire. Additionally, a deepfake video falsely showed Moldovan President Maia Sandu appearing to support a pro-Russian political party leader.

AI has also been misused to fabricate false scientific and health-related information. An Australian group claimed that proposed wind turbines could kill 400 whales annually, citing a study purportedly published in Marine Policy. However, no such study exists; the cited article was a fake created using AI.

While deliberate misinformation and falsehoods have existed for years, advances in AI have made it quicker and cheaper to spread unreliable claims. Despite efforts to detect and counter AI-generated fakes, concerns persist that the technology is improving faster than defenses against fake news can keep pace.

As ever more convincing fake content proliferates online, the veracity of information and sources has become harder to discern.

Churning out Fakes

Creating realistic fake content, such as photos and news stories, previously demanded significant time and expertise, particularly for deepfake audio and video clips. However, advancing AI technologies have democratized content fabrication, allowing nearly anyone to rapidly generate text, images, audio, or video using generative AI.

Recently, a team of healthcare researchers demonstrated the ease of generating disinformation by producing 102 blog articles comprising over 17,000 words of misleading information about vaccines and vaping within an hour using tools on OpenAI’s Playground platform.

Ashley Hopkins, a clinical epidemiologist at Flinders University in Adelaide, Australia, who played a role in this research, emphasized the surprising simplicity with which disinformation could be created using these AI tools.

Unlike conventionally produced content, AI-generated articles are often published with minimal human oversight on websites that disseminate false or deceptive news stories. Many of these sites lack transparency about their origins or ownership, contributing to the spread of misinformation.

Fake News (Really)

The graph illustrates the surge in unreliable AI-generated news websites identified by NewsGuard, escalating from 49 sites in May 2023 to over 600 by December, and surpassing 750 by March 2024.

Generative AI models use various techniques to produce convincing fake content. Text-generating models work by predicting the next word in a given context, having been trained on extensive text data.

During training, a model learns the complex patterns of grammar, word choice, and context by repeatedly trying to predict the next word and receiving feedback on its accuracy. This learning process allows the model to create new content when prompted.
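To make next-word prediction concrete, here is a minimal sketch in Python. It is a toy "bigram" counter, not the neural networks behind real text generators: it simply tallies which word most often follows each word in a tiny made-up corpus. The function names and the sample sentences are illustrative assumptions, not anything from the tools discussed here.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows, start, length=5):
    """Produce text by repeatedly predicting the next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)

# Hypothetical toy corpus; real models train on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish . the cat sat on the sofa ."
model = train_bigram(corpus)
print(generate(model, "cat", 3))
```

Real language models replace the simple counts with billions of learned parameters and consider far more context than one preceding word, but the core task, guessing what comes next, is the same.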

AI image generation relies on techniques such as generative adversarial networks (GANs) or diffusion models to produce realistic images. A GAN consists of a generator and a discriminator: the generator tries to produce increasingly realistic images, while the discriminator tries to tell generated images apart from real ones.

Through this competition, the generator eventually produces images that fool the discriminator, resulting in believable outputs. Diffusion models, in contrast, use a forward and a backward procedure: noise is gradually added to training images, and the model learns to remove it, which lets it generate high-quality images from extensive training data.
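The forward, noise-adding half of a diffusion model can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the "image" is a short list of pixel values, the noise level `beta` is held constant, and the function names are made up for this example. The backward, noise-removing half requires a trained neural network and is not shown.

```python
import math
import random

def add_noise_step(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    noise_scale = math.sqrt(beta)
    return [keep * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]

def forward_diffusion(pixels, beta, steps, seed=0):
    """Apply the noising step repeatedly; the result drifts toward pure noise."""
    rng = random.Random(seed)
    current = list(pixels)
    for _ in range(steps):
        current = add_noise_step(current, beta, rng)
    return current

def signal_fraction(beta, steps):
    """Fraction of the original pixel value still present after `steps` steps."""
    return (1.0 - beta) ** (steps / 2.0)

# Hypothetical four-pixel "image" with values between 0 and 1.
image = [0.1, 0.5, 0.9, 0.3]
noisy = forward_diffusion(image, beta=0.02, steps=200)
print(noisy)
print(signal_fraction(0.02, 200))
```

With these assumed settings, only about 13 percent of each original pixel value survives after 200 steps, and the rest is noise. A trained diffusion model learns to run this process in reverse, starting from noise and recovering a plausible image step by step.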

What’s Real? What’s Fake?

AI models have become so capable at producing content that people struggle to distinguish authentic content from fake.

Behavioral scientist Todd Helmus from the RAND Corporation highlighted that AI-generated content often appears more authentic than human-created content, blurring the line between truth and falsehood.

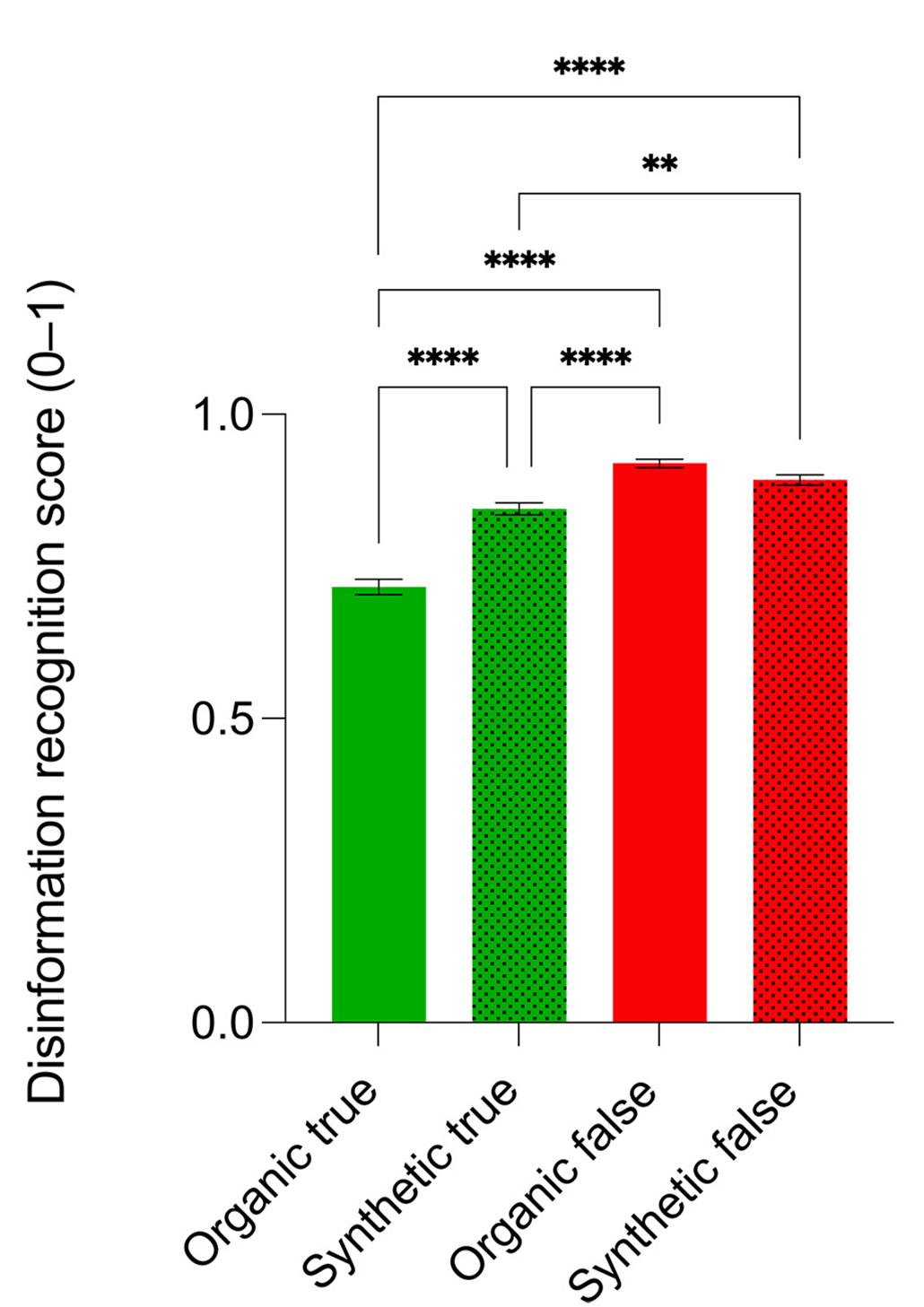

True or False?

Participants in a study overwhelmingly perceived synthetic posts made by AI as more accurate than organic posts crafted by real humans.

The study demonstrated that participants were more likely to believe false posts generated by AI models than those by actual individuals, indicating the sophistication of AI in mimicking human language.

In a study conducted by Federico Germani at the University of Zurich, AI models were shown to excel in replicating human language, even incorporating emotional elements that resonate with readers. The seamless integration of emotional language into AI-generated content enhances its persuasive impact and manipulative potential on readers.

How Can We Know What’s True Anymore?

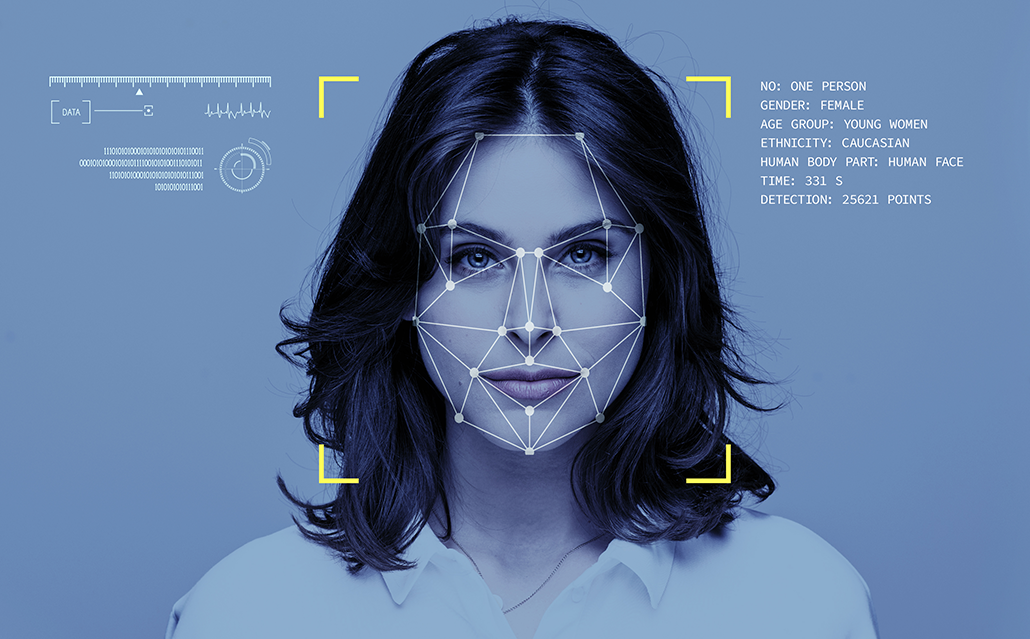

Traditionally, photos and videos served as vital proof of events; however, the prevalence of AI deepfakes has diminished the reliability of visual evidence.

Carl Vondrick, a computer scientist at Columbia University, emphasized the need for a critical reevaluation and skepticism towards photographic and video evidence in light of the prevalence of AI-manipulated content.

This growing distrust also makes it easier for people to disingenuously deny real events. For example, U.S. presidential candidate Donald Trump claimed, without evidence, that an attack advertisement portraying him unfavorably had been concocted with AI.

One AI-detection tool showed promising efficiency in spotting AI-generated texts by analyzing the usage of proper nouns within the content. This tool outperformed other detection methods by leveraging discrepancies in proper noun usage typically encountered in AI-generated texts.
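As a rough illustration of what "analyzing proper noun usage" could mean, the Python sketch below computes one simple feature: the share of words that are capitalized without starting a sentence. This is a hypothetical heuristic invented for this example, not the method used by the detection tool described above. It only measures the feature and makes no judgment about whether a text was written by AI.

```python
import re

def proper_noun_ratio(text):
    """Crude estimate: share of words capitalized mid-sentence.

    Assumption: a capitalized word that does not start a sentence is treated
    as a proper noun. This miscounts the pronoun "I", acronyms, and proper
    nouns that happen to begin a sentence.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    total = 0
    proper = 0
    for sentence in sentences:
        words = re.findall(r"[A-Za-z][A-Za-z'-]*", sentence)
        for i, word in enumerate(words):
            total += 1
            if i > 0 and word[0].isupper():
                proper += 1
    return proper / total if total else 0.0

sample = "Carl Vondrick works at Columbia University. He studies video."
print(proper_noun_ratio(sample))
```

A real detector would likely use a proper part-of-speech tagger and compare such features across many known human-written and AI-written texts before drawing any conclusion.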

Germani noted that polite language in prompts can speed up an AI model's production of disinformation. Because the models are trained to respond in ways that mirror human-to-human interactions, courteous requests may help malicious actors manipulate them into producing disinformation.

Efforts to combat AI-generated fake content are ongoing, with researchers exploring various detection strategies. While progress has been made in identifying AI-generated content, the dynamic nature of AI poses challenges in creating foolproof countermeasures against fake content.

As AI technology continues to evolve, the battle against AI-generated fakes remains an ongoing arms race, emphasizing the need for vigilance and proactive measures to combat the dissemination of fabricated content.

The regulatory framework surrounding AI content creation faces inherent limitations due to the ever-evolving nature of AI systems, as highlighted by Alondra Nelson. She advocates for policy directives that guide AI toward ethical and beneficial applications, underlining the necessity of preventing AI systems from engaging in deceptive practices.

Stringent controls and legal measures are in place to address the misuse of AI technologies, exemplified by President Biden’s executive order aimed at enforcing existing laws to counter fraudulent activities, biases, privacy breaches, and other AI-related harms. Regulatory bodies, such as the U.S. Federal Communications Commission, have taken action to prohibit AI-generated robocalls, with further legislative initiatives being deliberated.

What Can You Do?

Education is a critical defense against the deceptive allure of AI-generated disinformation. Todd Helmus advises cultivating a discerning mindset: treat all information with skepticism and evaluate its veracity to avoid falling for fake content.

Ruth Mayo from the Hebrew University of Jerusalem underscores the importance of choosing sources cautiously and relying on reputable ones for medical and factual information. Verifying authors, checking a site's credibility, and cross-referencing data from multiple sources are essential strategies for navigating a digital landscape fraught with misinformation.

Empowering individuals, particularly the younger generation, with the critical thinking skills to question textual and visual information fosters a more vigilant and discerning populace, as emphasized by Alondra Nelson. Through developing a media-literate society, we can counter the threat posed by AI-generated disinformation.